A common concern for large and/or public facing organizations is ensuring accessibility compliance, and a common troublemaker is missing alt attributes on image tags. In some cases, the moment this becomes a problem is when it is brought to management’s attention that thousands of out-of-compliance images on the site might cause a legal headache. Until recently, the options for solving this usually required some mixture of tedious brute force work or outsourcing.

Large Language Models are starting to make tasks like this much more palatable to take on in-house. LLM capabilities have expanded beyond text and there are now numerous “modes” of models available where the input and output types can be images, video, or audio. For this exercise we are mostly concerned with “image-to-text” models which tend to work well for generating a text caption from an image input.

I wanted to quickly explore a few of the “image-to-text” models available to get a sense of processing time and quality of the text outputs. I created a simple script that would process each image in a directory with three different image-to-text models that I selected for comparison. I was interested in how long it takes to process the set, and how good the quality of output would be for each model.

The steps to getting a caption from an image are simple to get started with. For example:

# /// script

# requires-python = ">=3.12"

# dependencies = [

# "torch",

# "transformers",

# "Pillow",

# ]

# ///

from pathlib import Path

import torch

from transformers import pipeline

# List of models to test

MODELS = [

"ydshieh/vit-gpt2-coco-en",

"Salesforce/blip-image-captioning-large",

"microsoft/git-base-coco",

]

def process_image(image_path: Path, models):

"""Process a single image through multiple models."""

print(f"\n{image_path.name}")

for model_name in models:

try:

# Initialize model

captioner = pipeline(

model=model_name,

device=0 if torch.cuda.is_available() else -1)

# Generate caption

caption = captioner(str(image_path))

# Get caption text

caption_text = \

caption[0]['generated_text'] if isinstance(caption, list) \

else caption

print(f"{model_name}: {caption_text}")

except Exception as e:

print(f"{model_name}: Error: {str(e)}")

def main():

"""Process all images in current directory."""

# Get all image files in current directory

image_files = list(Path().glob('*.jpg')) + list(Path().glob('*.png'))

if not image_files:

print("No image files (jpg/png) found in current directory!")

return

# Process each image

for img_path in image_files:

process_image(img_path, MODELS)

if __name__ == '__main__':

main()

Running the script in a directory containing images will start outputting captions generated from the three models. (You may see a bunch of console warnings for CPU-only or other factors). If you have uv installed, you can run it via

uv run https://gist.githubusercontent.com/unbracketed/1b001f5b655a9e65c7a5201a33c0ff91/raw/image-caption-demo.py

You can view the code in this gist. On my local machine I can run via uv run model-comparison.py imageswhere images is a directory next to the script.

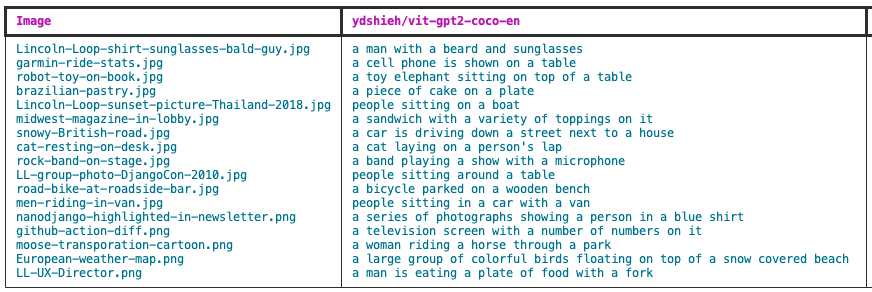

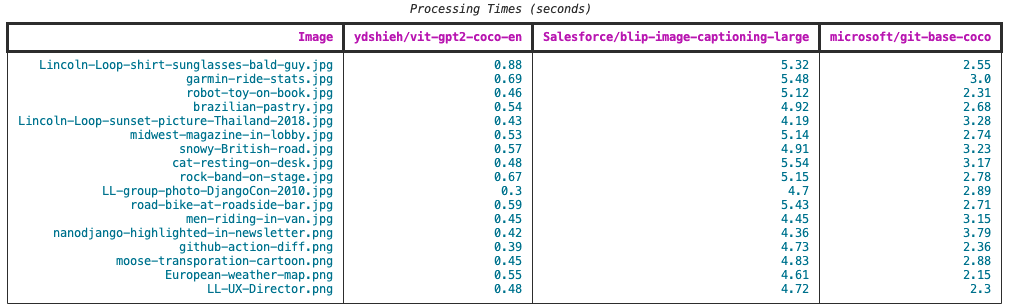

Using some images I hand selected from our general channel in Slack, I ran the tool to produce a couple of tables. The first shows the generated captions for each image across each model and it is formatted for a wide monitor, so I’ve truncated a couple of the model columns for display here. (The script includes a useful CSV output option which is more suitable for analysis.) The second table lists the processing times. A few of the images in my set failed during processing and weren’t included in the results.

Example of generated captions using vit-gpt2-coco-en for a set of images

Console output from running image-to-text model comparisons

A glance at the processing times shows us that each model has its own performance characteristics and the numbers suggest that for speed the winner is vit-gpt2-coco-en followed by git-base-coco and blip-image-captioning-large. There appears to be an order of magnitude difference between the speed of these models. Speed might be an important consideration for some tasks, but many organizations will care about the quality of the generated data. For my image set, I put a small bit of effort into selecting a range of subject-heavy to more abstract images to how the models would cope. Let’s compare the output for a few selected results.

Lincoln Loopers in Portland at DjangoCon 2010

| model | time | generated caption |

|---|---|---|

| vit | 0.3 | people sitting around a table |

| git | 2.89 | a group of people sitting around a table with laptops. |

| blip | 4.7 | several men sitting at a table with laptops in a restaurant |

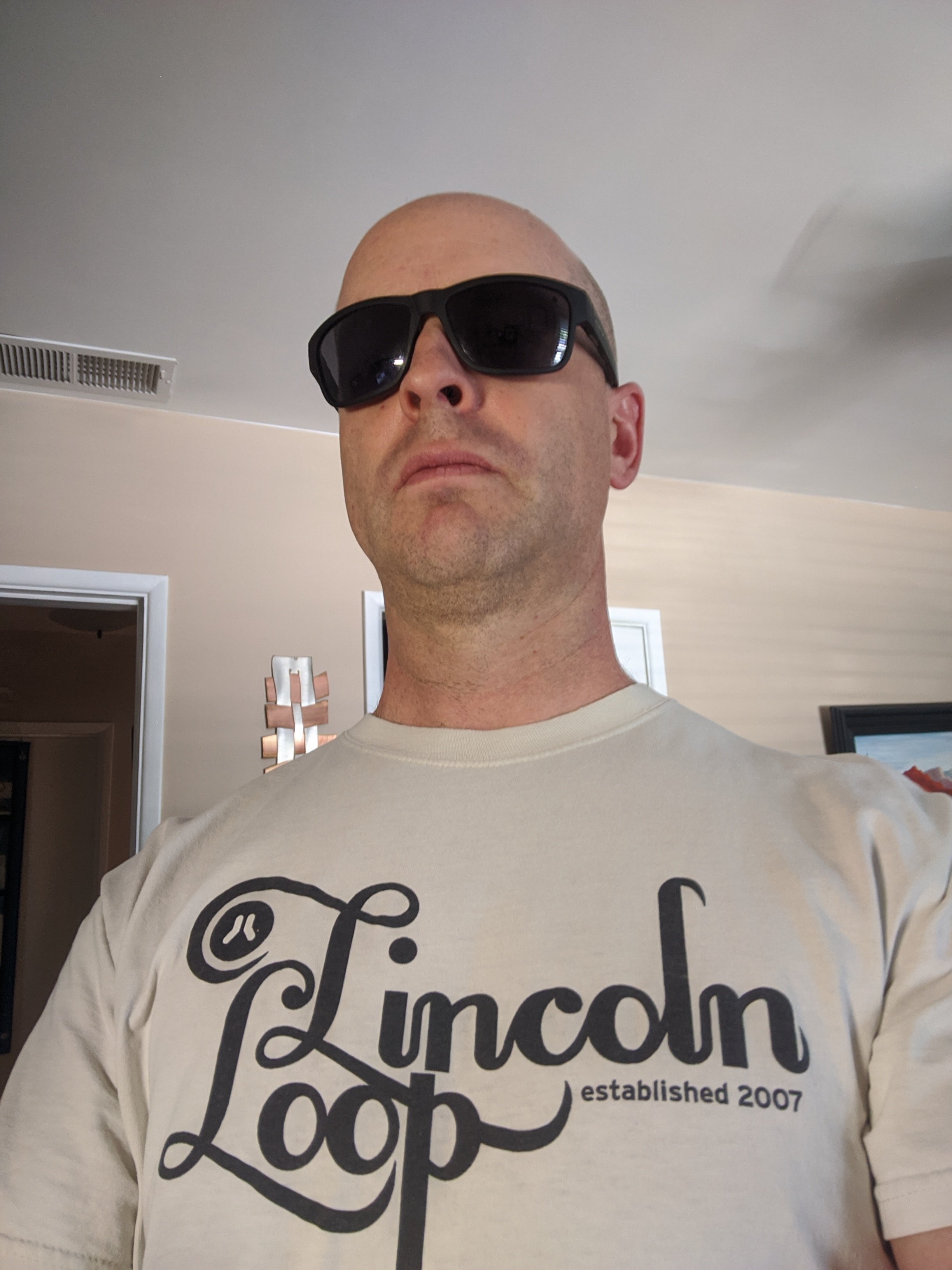

Brian Luft wearing sunglasses and wearing a "Lincoln Loop" t-shirt

| model | time | generated caption |

|---|---|---|

| vit | 0.88 | a man with a beard and sunglasses |

| git | 2.55 | a man wearing a t - shirt with sunglasses |

| blip | 5.32 | there is a man wearing sunglasses and a t - shirt that says lincoln loop |

A Garmin ride computer displaying ride stats

| model | time | generated caption |

|---|---|---|

| vit | 0.69 | a cell phone is shown on a table |

| git | 3.0 | a cell phone with a number of numbers on it. |

| blip | 5.48 | a close up of a gps device mounted on the handlebar of a motorcycle |

Retro Toy Robot standing on a book

| model | time | generated caption |

|---|---|---|

| vit | 0.46 | a toy elephant sitting on top of a table |

| git | 2.31 | a robot made out of plastic |

| blip | 5.12 | there is a toy robot sitting on a black surface |

A wintery day on a British road

| model | time | generated caption |

|---|---|---|

| vit | 0.57 | a car is driving down a street next to a house |

| git | 3.23 | the house is on the market for £ 1. 5 m |

| blip | 4.91 | cars parked in a residential area with a house in the background |

I still have to pinch myself that this kind of technology is available to run on consumer hardware. At a high-level, the results are decent and fairly consistent; probably usable to start solving problems with. However, one can see some relative differences. By a slightly objective amount, it seems the slowest model blip-image-captioning-large provides the most accuracy and detail. There’s a couple cases where the faster models missed the mark with vit-gpt2-coco-en identifying the toy robot as a toy elephant and git-base-coco describing the street photo as if it is a real estate ad with a price attached!

This article is intended as an overview of what is possible with relatively little effort today that used to be considered intractable outside of research labs. With the ever-growing choice in open source models available, there might be numerous viable options for your problem. Model cards will provide more detail about the training set and specialties of each model. You can start at the Hugging Face Image to Text guide and find models from there for testing with. There are more and more specialized models available like ones trained exclusively to detect dogs vs. cats or identify medicinal plant species, that might work well for your use case.