Integrate MCP Servers in Python LLM Code

Why write a Python MCP client?

The Model Context Protocol (MCP) is an open protocol for connecting large language models (LLMs) to external data sources and tools. It has seen growing adoption, notably through tools like Claude Desktop. MCP has two sides: clients (e.g., Claude Desktop) and servers. Servers expose the building blocks (prompts, resources, and tools) that give an LLM external context, while clients act as the bridge that connects users and applications to those servers.

Writing an MCP client allows developers to:

- Leverage existing servers to provide rich context to LLMs.

- Customize interactions with MCP servers for tailored workflows.

- Experiment with the evolving MCP ecosystem and tap into its growing catalog of awesome MCP servers.

With this understanding, let's dive into writing a Python MCP client that uses the OpenAI API.

Writing the Code

The following code sample adapts the MCP quickstart documentation: a Python script takes the place of Claude Desktop, and the OpenAI API (called through Mirascope) serves as the LLM. For more detailed guidance, refer to the official documentation.

```python
# /// script
# dependencies = [
#   "mirascope[openai]",
#   "mcp-server-sqlite",
#   "python-dotenv",
# ]
# ///
import dotenv
import asyncio

from mirascope.core import openai
from mirascope.mcp.client import create_mcp_client, StdioServerParameters

server_params = StdioServerParameters(
    command="uvx",
    args=["mcp-server-sqlite", "--db-path", "/tmp/delete-me-test.db"],  # Optional command-line arguments
    env=None,
)


async def main() -> None:
    dotenv.load_dotenv()  # Load environment variables

    async with create_mcp_client(server_params) as client:
        # List available prompts
        prompts = await client.list_prompts()
        print(prompts[0])

        # Retrieve and use a prompt template
        prompt_template = await client.get_prompt_template(prompts[0].name)
        prompt = await prompt_template(topic="toys")
        print(prompt)

        # List and access resources
        resources = await client.list_resources()
        resource = await client.read_resource(resources[0].uri)
        print(resource)

        # Interact with tools
        tools = await client.list_tools()

        @openai.call("gpt-4o-mini", tools=tools)
        def chat_sqlite(txt="") -> str:
            return txt

        # Loop for user input and LLM interaction
        while True:
            txt = input("Enter a message: ")
            if txt == "q":
                break
            result = chat_sqlite(txt)
            if tool := result.tool:
                call_result = await tool.call()
                print(call_result)
            else:
                print(result.content)


asyncio.run(main())
```

Key Steps Explained:

Setup:

- The script initializes environment variables via dotenv.

- It defines how to launch the MCP server (`mcp-server-sqlite`, started via `uvx`) and where its SQLite database file lives (`--db-path`).
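Because the script calls the OpenAI API, it needs an API key at runtime. The OpenAI Python SDK reads the key from the `OPENAI_API_KEY` environment variable, which `dotenv.load_dotenv()` can populate from a `.env` file. The following is a minimal sketch of a fail-fast check that could run right after loading the environment. The helper name `require_env` is hypothetical and not part of the original script.

```python
import os
from collections.abc import Mapping


def require_env(name: str, environ: Mapping[str, str] = os.environ) -> str:
    """Return the value of an environment variable or fail with a clear message.

    `require_env` is a hypothetical helper used for illustration only.
    """
    value = environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; add it to your .env file or export it in the shell"
        )
    return value


# Example with an explicit mapping, so the sketch runs without a real key.
print(require_env("OPENAI_API_KEY", {"OPENAI_API_KEY": "sk-example"}))
```

In the script, calling `require_env("OPENAI_API_KEY")` after `dotenv.load_dotenv()` would surface a missing key before the MCP server is even started.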

Client Interaction:

- Prompts, resources, and tools are listed using the client object.

- The first prompt template is fetched by name and rendered with a topic (here, "toys"), the first resource is read by its URI, and the server's tools are handed to the LLM call.

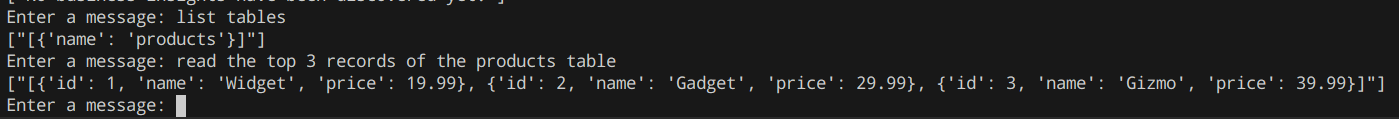
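Under the hood, MCP messages are JSON-RPC 2.0, and over the stdio transport each message travels as one line of JSON. The sketch below shows roughly what requests for the client calls above could look like on the wire. It is an illustration, not the Mirascope implementation: the helper `jsonrpc_request` is hypothetical, and the prompt name, resource URI, and tool arguments are placeholder values. A real session also begins with an `initialize` handshake, omitted here.

```python
import itertools
import json
from typing import Any, Optional

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize one JSON-RPC 2.0 request as a single line of JSON (hypothetical helper)."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Placeholder names and arguments; a real server advertises its own.
requests = [
    jsonrpc_request("prompts/list"),
    jsonrpc_request("prompts/get", {"name": "example-prompt", "arguments": {"topic": "toys"}}),
    jsonrpc_request("resources/list"),
    jsonrpc_request("resources/read", {"uri": "example://resource"}),
    jsonrpc_request("tools/list"),
    jsonrpc_request("tools/call", {"name": "example-tool", "arguments": {"query": "SELECT 1"}}),
]

for line in requests:
    print(line)
```

A client library hides this framing, which is why the script only ever deals with Python objects such as `prompts[0].name` and `resources[0].uri`.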

User Loop:

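- Each message the user enters is sent to the LLM. If the model responds with a tool call, the script executes that tool on the server and prints the result; otherwise it prints the model's text reply. Entering `q` exits the loop.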
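The branching inside the loop can be isolated and exercised without an API key or a running server. The sketch below uses stand-in classes (`FakeTool` and `FakeResponse`, both hypothetical) that mimic only the two attributes the script relies on, `result.tool` and `result.content`. It is meant to show the dispatch logic, not the real response types.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class FakeTool:
    """Stand-in for a tool call requested by the model (hypothetical)."""

    name: str

    async def call(self) -> str:
        # The real tool would forward the call to the MCP server.
        return f"result of {self.name}"


@dataclass
class FakeResponse:
    """Stand-in for the LLM response; only `content` and `tool` are modeled."""

    content: str
    tool: Optional[FakeTool] = None


async def handle_response(result: FakeResponse) -> str:
    """Run the requested tool if there is one, otherwise return the text reply."""
    if tool := result.tool:
        return await tool.call()
    return result.content


async def demo() -> list[str]:
    plain = FakeResponse(content="Hello!")
    with_tool = FakeResponse(content="", tool=FakeTool("list_tables"))
    return [await handle_response(plain), await handle_response(with_tool)]


print(asyncio.run(demo()))
```

Pulling the branch into a function like `handle_response` also makes it easier to later feed the tool result back to the model instead of just printing it.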

Extensibility:

- The script can be extended with additional servers or workflows, adapting to evolving MCP capabilities.
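One way to add more servers is to open several clients at once and merge their tool lists before handing them to the LLM. The sketch below shows the pattern with `contextlib.AsyncExitStack` from the standard library. It assumes each client is an async context manager with a `list_tools()` method, as `create_mcp_client` is used in the script. `FakeClient` and `fake_mcp_client` are hypothetical stand-ins so the example runs without any server, and the tool names are illustrative.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager
from typing import AsyncIterator


class FakeClient:
    """Stand-in for an MCP client; only `list_tools` is modeled (hypothetical)."""

    def __init__(self, tools: list[str]) -> None:
        self._tools = tools

    async def list_tools(self) -> list[str]:
        return list(self._tools)


@asynccontextmanager
async def fake_mcp_client(tools: list[str]) -> AsyncIterator[FakeClient]:
    # In the real script this role is played by create_mcp_client(server_params).
    yield FakeClient(tools)


async def collect_tools(servers: dict[str, list[str]]) -> list[str]:
    """Open one client per server and return the combined tool list."""
    async with AsyncExitStack() as stack:
        all_tools: list[str] = []
        for tools in servers.values():
            # Each client stays open until the stack exits, then all are closed.
            client = await stack.enter_async_context(fake_mcp_client(tools))
            all_tools.extend(await client.list_tools())
        return all_tools


servers = {"sqlite": ["read_query", "list_tables"], "other": ["fetch"]}
print(asyncio.run(collect_tools(servers)))
```

In a real client the chat loop would need to run inside the `async with` block, since tools can only be called while their server connection is open.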

How to run this Python client

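Save the script as `mirascope_sqlite_client.py`. `uv` lets you run it directly: it reads the inline dependency block at the top of the file and installs those dependencies before running the script.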

uv run mirascope_sqlite_client.py

Start experimenting with the script to unlock the power of MCP integration and create smarter, context-aware applications. 🚀