Last month I had some time to take a closer look at how our website was performing from a pure speed perspective. It’s a pretty simple site, a bit under 400KB in total and very different from most of our customers’ sites: we have few third-party scripts, zero ads, an optimised back-end that responds in under 200ms and a very small JavaScript footprint. We had also already played the content optimisation game when we launched, combining and minifying assets, optimising images and serving them via a CDN. It was already a fast site, but could it be faster?

Step #1: Analyse and measure

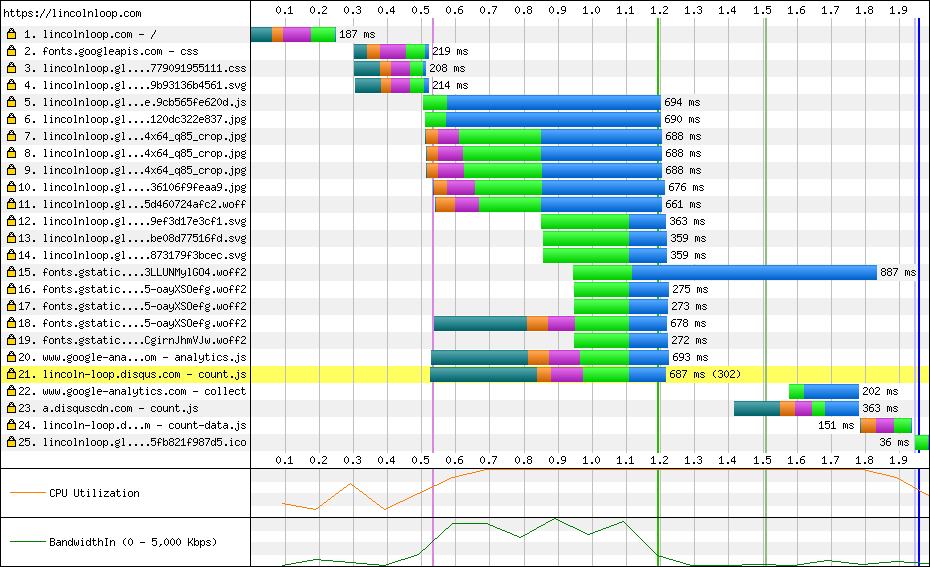

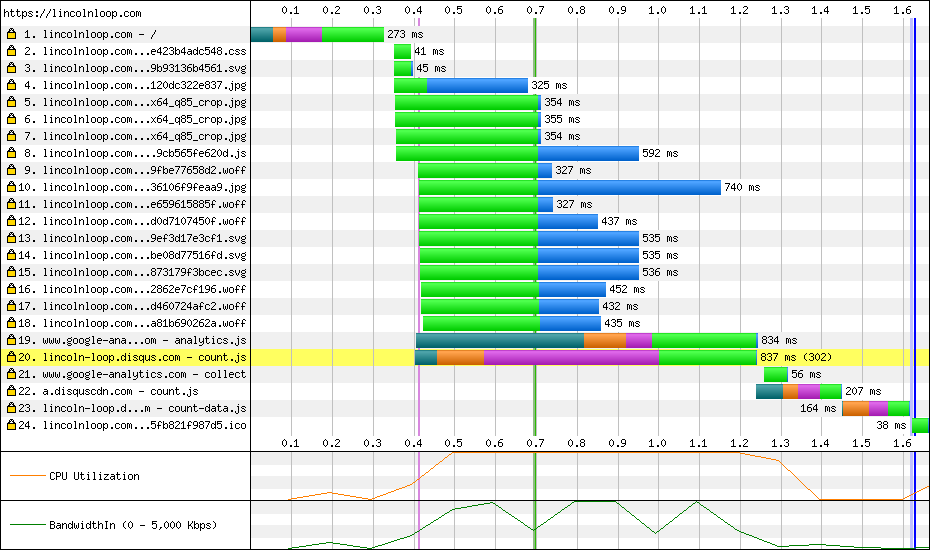

First, let’s take a look at our initial waterfall view, which we got from the awesome webpagetest.org.

We focused on optimising the first view (empty cache), since we already had cache headers for the assets and repeat views were really fast, with only one request on the Critical Rendering Path.
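Repeat views are cheap because the browser can reuse cached assets without asking the server again. As a rough sketch (a simplification that only looks at `Cache-Control` and ignores `Expires`, `s-maxage` and heuristic caching), the helper below flags which assets would be fetched or revalidated on a repeat view. The asset paths and header values are hypothetical, not our actual configuration.

```python
from typing import Dict, List, Optional


def max_age_seconds(cache_control: str) -> Optional[int]:
    """Return max-age from a Cache-Control value, or None if the response
    can't be reused from cache without going back to the server."""
    directives = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.strip().lower()] = value.strip().strip('"')
    # no-store / no-cache mean the browser must fetch or revalidate every time.
    if "no-store" in directives or "no-cache" in directives:
        return None
    try:
        return int(directives.get("max-age", ""))
    except ValueError:
        # Missing or malformed max-age: treat as not explicitly cacheable.
        return None


def refetched_on_repeat_view(assets: Dict[str, str]) -> List[str]:
    """List asset paths whose Cache-Control header doesn't allow plain reuse."""
    return [path for path, cc in assets.items() if not max_age_seconds(cc)]


# Hypothetical headers, for illustration only.
headers = {
    "/": "no-cache",
    "/static/css/site.css": "public, max-age=31536000",
    "/static/js/site.js": "public, max-age=31536000",
}
print(refetched_on_repeat_view(headers))  # ['/']
```

With long-lived `max-age` values on static assets, only the HTML document itself goes back to the server, which is the kind of repeat view described above.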

With such a simple site, it’s clear from the waterfall view that performance can be improved by minimising DNS lookups and external connections, specifically those for our CDN and the Google Fonts we were using.
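On an empty cache, every distinct hostname in the waterfall can cost a DNS lookup plus a new connection. A quick way to see how many you are paying for is to list the unique hosts among the page’s resource URLs. This is a minimal sketch; the URLs below are hypothetical stand-ins (`cdn.example.com` is not our real CDN host), though `fonts.googleapis.com` and `fonts.gstatic.com` are the two hosts Google Fonts uses for CSS and font files.

```python
from typing import Iterable, List
from urllib.parse import urlsplit


def distinct_origins(resource_urls: Iterable[str]) -> List[str]:
    """Return hostnames in first-seen order; on an empty cache each one is
    a potential DNS lookup plus a new connection."""
    seen: List[str] = []
    for url in resource_urls:
        host = urlsplit(url).hostname
        if host and host not in seen:
            seen.append(host)
    return seen


# Hypothetical resource list resembling a page before the changes.
before = [
    "https://lincolnloop.com/",
    "https://cdn.example.com/css/site.css",
    "https://cdn.example.com/js/site.js",
    "https://fonts.googleapis.com/css?family=Example",
    "https://fonts.gstatic.com/s/example/v1/font.woff",
]
print(distinct_origins(before))
# ['lincolnloop.com', 'cdn.example.com', 'fonts.googleapis.com', 'fonts.gstatic.com']
```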

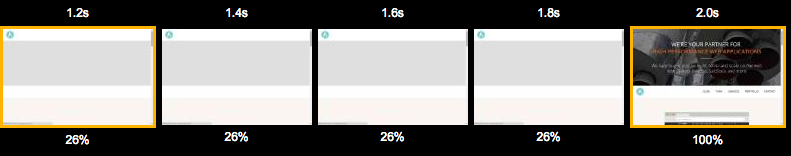

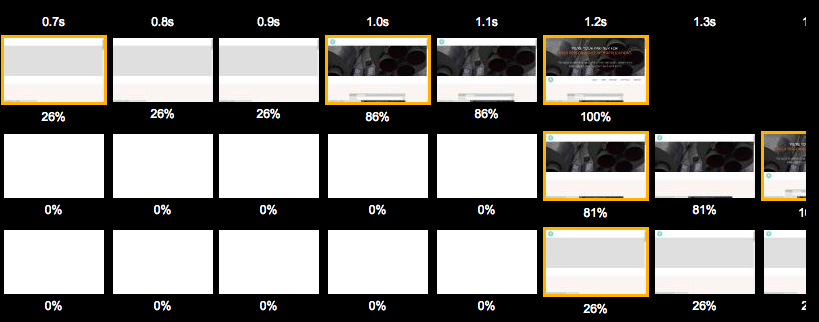

Here’s the rendering timeline, which reflects the user’s perceived speed of the site:

Step #2: Reduce DNS lookups by ditching the CDN and serving static files from nginx with SPDY

Since DNS lookups and establishing new connections were a major part of the bottleneck, we reduced them by serving static files directly from the lincolnloop.com domain through nginx and SPDY (which we covered a while back).

For our static files this was pretty much the flip of a switch, and with SPDY now widely supported by current browsers (except Safari), sharding assets across multiple CDN sub-domains to allow more parallel downloads may soon be a thing of the past.
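Why would dropping a CDN make things faster? A back-of-the-envelope model helps: each extra origin costs a DNS lookup, one round trip for the TCP handshake and, over HTTPS, roughly two more for a full TLS 1.2 handshake, whereas SPDY multiplexes all requests to one origin over a single connection, so that setup is paid once. The sketch below assumes the handshakes for different origins happen one after another, which overstates the cost when they overlap; all the numbers are illustrative assumptions, not our measurements.

```python
def extra_setup_ms(extra_origins: int, rtt_ms: float, dns_ms: float,
                   tls_round_trips: int = 2) -> float:
    """Worst-case (fully serial) time spent setting up connections to extra
    origins: a DNS lookup, one RTT for the TCP handshake and
    `tls_round_trips` RTTs for a full TLS handshake per origin."""
    per_origin = dns_ms + rtt_ms * (1 + tls_round_trips)
    return extra_origins * per_origin


# Assumed numbers, purely for illustration: 50ms RTT, 30ms DNS lookup,
# three extra hosts (a CDN plus the two Google Fonts domains).
print(extra_setup_ms(3, rtt_ms=50, dns_ms=30))  # 540
```

For an origin fetched over plain HTTP, pass `tls_round_trips=0`. Even with modest round-trip times, a few hundred milliseconds can go to connection setup alone on a page this small.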

Here’s how this changed our rendering time:

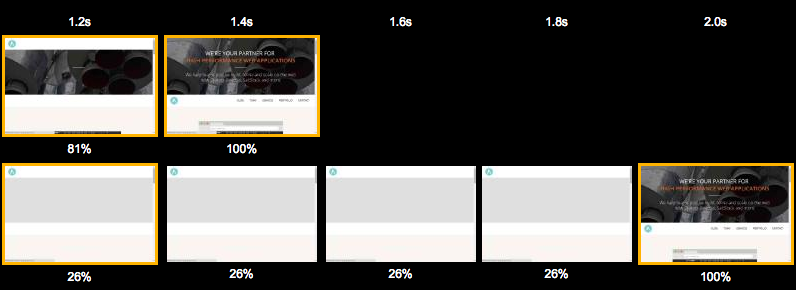

and here’s what our waterfall view looks like now:

Step #3: Reduce DNS lookups by self-hosting custom fonts

We are using Google Fonts, which by default comes with a two-domain penalty: one for the CSS request and one for the font files themselves. You should take this optimisation with a grain of salt, since the tests are run with a clean cache, whereas in reality the user might already have the CSS or font files cached from another site. There are also other arguments for using Google’s CDN all the way, like optimised CSS font files for each browser and automatic font updates, so evaluate carefully whether you would really benefit from this.

In our case, though, we use a somewhat uncommon combination of fonts and found that the CSS request to Google could take anywhere from 200ms to 1s. We tested self-hosting only the CSS, but noticed that font rendering started earlier if we self-hosted the fonts too, so we went with that. Still, it’s important to consider the tradeoffs.
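One way to self-host is to download the stylesheet Google serves, fetch the font files it references and point the `url(...)` entries at your own static path. The sketch below only does the rewriting step; the `/static/fonts/` prefix and the sample CSS are hypothetical. Keep in mind that Google returns different CSS depending on the browser’s User-Agent, which is exactly the per-browser optimisation you give up, so fetch the stylesheet for each browser you care about.

```python
import posixpath
import re
from typing import List, Tuple
from urllib.parse import urlsplit

# Matches url(...) entries pointing at fonts.gstatic.com, quoted or not.
FONT_URL = re.compile(r"url\((['\"]?)(https://fonts\.gstatic\.com/[^)'\"]+)\1\)")


def localise_font_css(css: str,
                      static_prefix: str = "/static/fonts/") -> Tuple[str, List[str]]:
    """Rewrite fonts.gstatic.com URLs in a downloaded Google Fonts stylesheet
    to local paths. Returns the new CSS and the remote files to download."""
    remote_files: List[str] = []

    def to_local(match: "re.Match[str]") -> str:
        remote = match.group(2)
        remote_files.append(remote)
        # Keep only the file name; assumes names don't collide across fonts.
        filename = posixpath.basename(urlsplit(remote).path)
        return f"url({static_prefix}{filename})"

    return FONT_URL.sub(to_local, css), remote_files


# Hypothetical stylesheet in the shape Google Fonts returns.
css = ("@font-face { font-family: 'Example'; "
       "src: url(https://fonts.gstatic.com/s/example/v1/abc123.woff2) format('woff2'); }")
new_css, files = localise_font_css(css)
print(new_css)
print(files)
```

Serving the rewritten CSS and the downloaded files from your own domain removes both Google hosts from the critical path.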

Conclusion

The changes we made were very noticeable to users, although the exact numbers are harder to pin down because of normal network performance variations across our tests. For what it’s worth, we measured a decrease in total page rendering time from 2.0s to 1.2s, with initial rendering now happening 0.7s into the loading process versus 1.2s previously, an improvement of about 40% in both cases.
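For anyone checking the arithmetic, the percentage reduction is simply the time saved divided by the original time:

```python
def percent_reduction(before_s: float, after_s: float) -> float:
    """Percentage of the original time that was saved."""
    return (before_s - after_s) / before_s * 100


print(round(percent_reduction(2.0, 1.2)))  # total rendering time: 40
print(round(percent_reduction(1.2, 0.7)))  # initial render: 42
```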

It’s also worth noting that every case is unique: not many high-traffic sites have fewer than 30 resources or browser requirements like ours. Still, it does show how big performance gains can be made on the front end with small changes that, thanks to SPDY, don’t necessarily follow some of the established best practices.